9. Develop a program to implement the Naive Bayes classifier, using the Olivetti Faces dataset for training. Compute the accuracy of the classifier on a few test data sets.
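The program below uses scikit-learn's `GaussianNB`. Here is a minimal NumPy sketch of Gaussian Naive Bayes that roughly illustrates what such a classifier computes. For each class c it stores the mean μ_cj and variance σ²_cj of every feature j (for Olivetti, each of the 4096 pixels). It then predicts the class c that maximises log P(c) + Σ_j log N(x_j; μ_cj, σ²_cj), treating pixels as independent given the class. The class name `SimpleGaussianNB` is hypothetical. The `var_smoothing` term imitates the parameter of the same name in `GaussianNB`. The demo uses synthetic data instead of the Olivetti download.

```python
import numpy as np


class SimpleGaussianNB:
    """Minimal Gaussian Naive Bayes sketch (hypothetical class, not sklearn's code).

    Each feature is modelled as an independent normal distribution per class;
    prediction picks the class with the highest log posterior.
    """

    def __init__(self, var_smoothing=1e-9):
        # Same idea as GaussianNB's var_smoothing: add a small fraction of the
        # largest feature variance so that no variance is exactly zero.
        self.var_smoothing = var_smoothing

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        eps = self.var_smoothing * X.var(axis=0).max()
        # Per-class mean and variance of every feature: shape (n_classes, n_features)
        self.theta_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        self.var_ = np.array([X[y == c].var(axis=0) for c in self.classes_]) + eps
        # Class priors P(c) estimated from class frequencies
        self.log_prior_ = np.log(np.array([np.mean(y == c) for c in self.classes_]))
        return self

    def joint_log_likelihood(self, X):
        X = np.asarray(X, dtype=float)
        scores = []
        for mu, var, log_prior in zip(self.theta_, self.var_, self.log_prior_):
            # log N(x_j; mu_j, var_j) summed over all features j
            log_lik = (-0.5 * np.sum(np.log(2.0 * np.pi * var))
                       - 0.5 * np.sum((X - mu) ** 2 / var, axis=1))
            scores.append(log_prior + log_lik)
        return np.column_stack(scores)  # shape (n_samples, n_classes)

    def predict(self, X):
        return self.classes_[np.argmax(self.joint_log_likelihood(X), axis=1)]

    def score(self, X, y):
        # Accuracy: fraction of samples whose predicted class matches the label
        return float(np.mean(self.predict(X) == np.asarray(y)))


# Usage on synthetic data (two well-separated 4-feature clusters), because
# fetching the Olivetti faces requires a download.
rng = np.random.default_rng(0)
X_demo = np.vstack([rng.normal(0.0, 1.0, (50, 4)), rng.normal(5.0, 1.0, (50, 4))])
y_demo = np.array([0] * 50 + [1] * 50)
model = SimpleGaussianNB().fit(X_demo, y_demo)
print(model.score(X_demo, y_demo))
```

Working in log space turns the product of thousands of per-pixel densities into a sum, which avoids numerical underflow.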

PROGRAM:

import numpy as np
from sklearn.datasets import fetch_olivetti_faces
from sklearn.model_selection import train_test_split, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
import matplotlib.pyplot as plt

data = fetch_olivetti_faces(shuffle=True, random_state=42)  # 10 images each of 40 people, 64x64 pixels
X = data.data    # shape (400, 4096): each row is one flattened image
y = data.target  # person ID, 0 to 39

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)  # 280 train / 120 test images, not stratified by person

gnb = GaussianNB()  # models each pixel as an independent normal distribution per person
gnb.fit(X_train, y_train)
y_pred = gnb.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)
print(f'Accuracy: {accuracy * 100:.2f}%')
print("\nClassification Report:")
print(classification_report(y_test, y_pred, zero_division=1))  # zero_division=1: report 1.0 instead of warning when a metric's denominator is 0
print("\nConfusion Matrix:")
print(confusion_matrix(y_test, y_pred))

cross_val_accuracy = cross_val_score(gnb, X, y, cv=5, scoring='accuracy')  # 5-fold cross-validation on all 400 images
print(f'\nCross-validation accuracy: {cross_val_accuracy.mean() * 100:.2f}%')

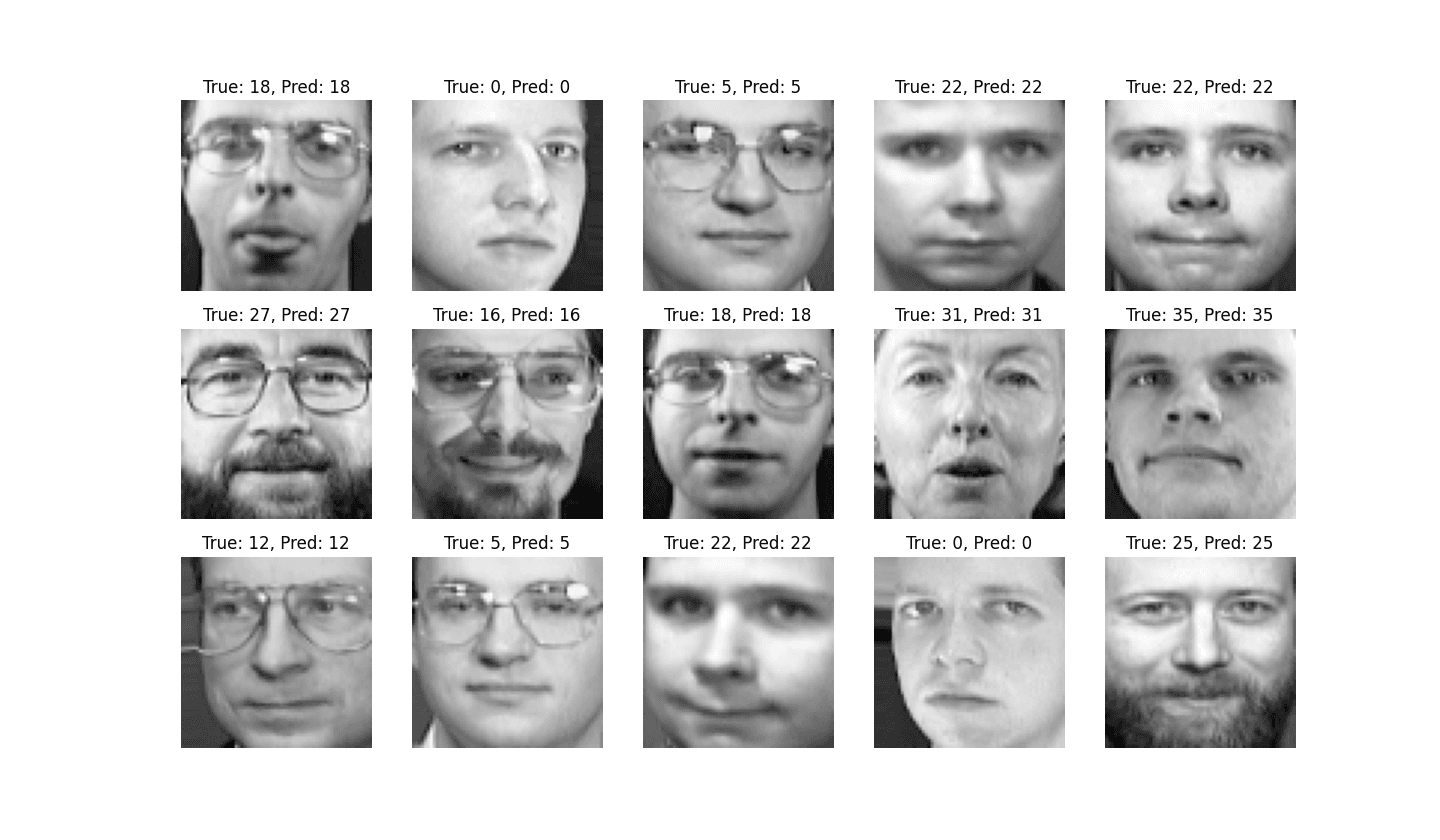

fig, axes = plt.subplots(3, 5, figsize=(12, 8))  # grid for the first 15 test images
for ax, image, label, prediction in zip(axes.ravel(), X_test, y_test, y_pred):
    ax.imshow(image.reshape(64, 64), cmap=plt.cm.gray)  # restore the 64x64 image from its 4096-pixel row
    ax.set_title(f"True: {label}, Pred: {prediction}")
    ax.axis('off')
plt.tight_layout()
plt.show()

OUTPUT:

Accuracy: 80.83%

Classification Report:
              precision    recall  f1-score   support

           0       0.67      1.00      0.80         2
           1       1.00      1.00      1.00         2
           2       0.33      0.67      0.44         3
           3       1.00      0.00      0.00         5
           4       1.00      0.50      0.67         4
           5       1.00      1.00      1.00         2
           7       1.00      0.75      0.86         4
           8       1.00      0.67      0.80         3
           9       1.00      0.75      0.86         4
          10       1.00      1.00      1.00         3
          11       1.00      1.00      1.00         1
          12       0.40      1.00      0.57         4
          13       1.00      0.80      0.89         5
          14       1.00      0.40      0.57         5
          15       0.67      1.00      0.80         2
          16       1.00      0.67      0.80         3
          17       1.00      1.00      1.00         3
          18       1.00      1.00      1.00         3
          19       0.67      1.00      0.80         2
          20       1.00      1.00      1.00         3
          21       1.00      0.67      0.80         3
          22       1.00      0.60      0.75         5
          23       1.00      0.75      0.86         4
          24       1.00      1.00      1.00         3
          25       1.00      0.75      0.86         4
          26       1.00      1.00      1.00         2
          27       1.00      1.00      1.00         5
          28       0.50      1.00      0.67         2
          29       1.00      1.00      1.00         2
          30       1.00      1.00      1.00         2
          31       1.00      0.75      0.86         4
          32       1.00      1.00      1.00         2
          34       0.25      1.00      0.40         1
          35       1.00      1.00      1.00         5
          36       1.00      1.00      1.00         3
          37       1.00      1.00      1.00         1
          38       1.00      0.75      0.86         4
          39       0.50      1.00      0.67         5

    accuracy                           0.81       120
   macro avg       0.89      0.85      0.83       120
weighted avg       0.91      0.81      0.81       120
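The report can be read as follows. Precision is TP divided by the number of times a class was predicted. Recall is TP divided by the support, and F1 is their harmonic mean. "macro avg" is the unweighted mean over classes, while "weighted avg" weights each class by its support, so weighted recall always equals accuracy (0.81 here). With zero_division=1, a class that is never predicted gets precision 1.00. That is why class 3 reads 1.00 0.00 0.00, and it partly inflates the macro precision of 0.89. Below is a NumPy sketch that recomputes these numbers from a confusion matrix on toy labels. The helper names `confusion` and `per_class_metrics` are hypothetical, and scikit-learn may treat some zero-division corner cases differently.

```python
import numpy as np


def confusion(y_true, y_pred, labels):
    """Rows = true class, columns = predicted class (sklearn's layout)."""
    index = {lab: i for i, lab in enumerate(labels)}
    cm = np.zeros((len(labels), len(labels)), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[index[t], index[p]] += 1
    return cm


def per_class_metrics(cm, zero_division=1.0):
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)   # column sums: how often each class was predicted
    support = cm.sum(axis=1)     # row sums: true samples per class
    with np.errstate(divide="ignore", invalid="ignore"):
        # A class that is never predicted has undefined precision; use zero_division.
        precision = np.where(predicted > 0, tp / predicted, zero_division)
        recall = np.where(support > 0, tp / support, zero_division)
        denom = precision + recall
        # Simplification: F1 is set to 0 when precision and recall are both 0.
        f1 = np.where(denom > 0, 2 * precision * recall / denom, 0.0)
    macro = [m.mean() for m in (precision, recall, f1)]
    weighted = [np.average(m, weights=support) for m in (precision, recall, f1)]
    accuracy = np.trace(cm) / cm.sum()
    return precision, recall, f1, macro, weighted, accuracy


# Toy labels: class 2 is never predicted, like class 3 in the report.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 0, 0, 0]
cm = confusion(y_true, y_pred, labels=[0, 1, 2])
precision, recall, f1, macro, weighted, accuracy = per_class_metrics(cm)
print(cm)
print(precision.round(2), recall.round(2), f1.round(2))
print("macro:", np.round(macro, 2), "weighted:", np.round(weighted, 2), "accuracy:", accuracy)
```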

Confusion Matrix:
[[2 0 0 ... 0 0 0]
 [0 2 0 ... 0 0 0]
 [0 0 2 ... 0 0 1]
 ...
 [0 0 0 ... 1 0 0]
 [0 0 0 ... 0 3 0]
 [0 0 0 ... 0 0 5]]
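NumPy abbreviates the printed confusion matrix with "..." because it has more than 1000 entries, which is NumPy's default `threshold` print option. Here the matrix is 38 x 38, with one row and column for each person that appears in y_test or y_pred. The sketch below prints such a matrix in full and lists which persons were confused with which. The labels are made up, and the matrix is built with `np.add.at` rather than `confusion_matrix` so that the example runs without scikit-learn.

```python
import sys

import numpy as np

# Made-up labels standing in for y_test / y_pred (40 classes, 3 samples each).
y_true = np.repeat(np.arange(40), 3)
y_pred = y_true.copy()
y_pred[[0, 5]] = [1, 7]          # two deliberate mistakes

# Rows = true class, columns = predicted class (same layout as confusion_matrix).
cm = np.zeros((40, 40), dtype=int)
np.add.at(cm, (y_true, y_pred), 1)

print(cm)                        # 1600 entries > default threshold of 1000, so "..." appears
full = np.array2string(cm, threshold=sys.maxsize, max_line_width=200)
print(full)                      # every row and column

# Non-zero off-diagonal cells are the misclassifications (true, predicted).
errors = cm.copy()
np.fill_diagonal(errors, 0)
rows, cols = np.nonzero(errors)
pairs = list(zip(rows.tolist(), cols.tolist()))
print(pairs)                     # [(0, 1), (1, 7)]
```

In the program itself, calling np.set_printoptions(threshold=sys.maxsize) (after import sys) before print(confusion_matrix(y_test, y_pred)) should have the same effect.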

Cross-validation accuracy: 87.25%
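The classification report has no rows for persons 6 and 33. The unstratified 70/30 split happened to put none of their images in the test set, so per-person support ranges from 1 to 5. Passing stratify=y to train_test_split should give exactly three test images per person. For a classifier, cross_val_score with cv=5 already uses stratified folds. The 87.25% it reports is the mean of five per-fold accuracies, each measured on a different test set. Below is a NumPy sketch of stratified fold assignment. It uses a simplified round-robin scheme; the function name is hypothetical, and this is not scikit-learn's exact StratifiedKFold algorithm.

```python
import numpy as np


def stratified_fold_indices(y, n_splits=5, seed=None):
    """Yield (train_idx, test_idx) pairs in which every class is spread over the folds.

    Simplified scheme (hypothetical helper): the samples of each class are
    dealt out to the folds in turn, after an optional shuffle within the class.
    """
    y = np.asarray(y)
    rng = np.random.default_rng(seed)
    fold_of = np.empty(len(y), dtype=int)
    for c in np.unique(y):
        idx = np.flatnonzero(y == c)
        if seed is not None:
            idx = rng.permutation(idx)
        fold_of[idx] = np.arange(len(idx)) % n_splits  # round-robin assignment
    for k in range(n_splits):
        yield np.flatnonzero(fold_of != k), np.flatnonzero(fold_of == k)


# Olivetti-shaped labels: 40 people x 10 images each.
y_demo = np.repeat(np.arange(40), 10)
for k, (train_idx, test_idx) in enumerate(stratified_fold_indices(y_demo, n_splits=5, seed=0)):
    per_class = np.bincount(y_demo[test_idx], minlength=40)
    print(f"fold {k}: {len(train_idx)} train, {len(test_idx)} test, "
          f"{per_class.min()}-{per_class.max()} test images per person")
```

To score a model, fit it on X[train_idx], compute accuracy on X[test_idx], and average the five values. That average is what cross_val_accuracy.mean() reports in the program.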
